Today, one of the biggest barriers to using advanced data analytics is not skill or technology, but simply access to data and the ability to build queries quickly and inexpensively. In this post, we explain what data silos are, why they are a clear problem for today’s organizations, and how solving this problem can deliver significant benefits.

Many CIOs and managers at well-known companies tell us they are excited about the potential of analytics for their business. But they can’t always get their hands on the data. Using data as a competitive advantage is a necessity for today’s businesses, so why is it so difficult to get the data we need?

There is a cost to using data. Behind the promise of powerful analytic insights lies a great deal of tedious data preparation. In fact, since the early days of data science, practitioners have often stated that about 80% of the work involved is data acquisition and preparation. Despite the best efforts of software vendors to create self-service data preparation tools, this proportion is likely to remain roughly the same for the foreseeable future, simply because organizations have not been able to solve this problem with traditional software.

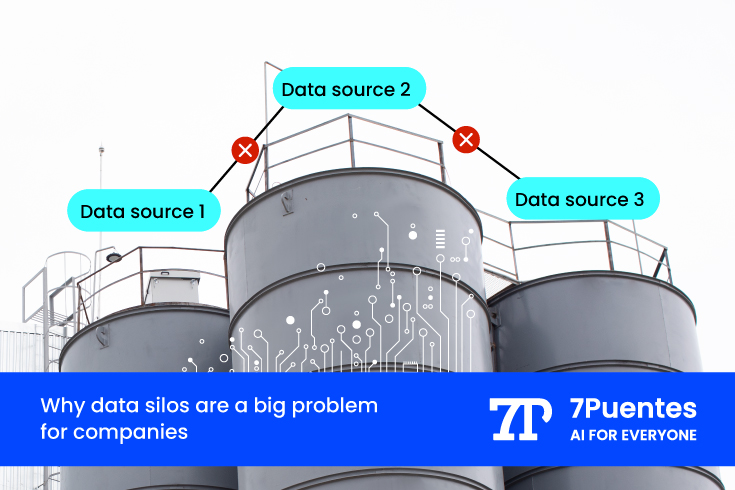

The word «silo» comes from agriculture, where it names a structure used to store bulk materials, mainly grain, with the contents of each compartment kept separate from the others. In the context of data, the metaphor applies to the different departments or areas of a company, each keeping its data apart from the rest. This is especially true in large companies where many functions coexist, such as human resources, operations, quality, sales, marketing, and strategy.

When data is «siloed» within a team, it can have several negative effects:

- Limited collaboration across the organization. Teams make decisions based on the information they have. If they only have access to the data available in their department, they will miss opportunities to collaborate with other teams and achieve the common business goal.

- Reduced data accuracy and credibility. Isolated data can quickly become outdated and provide inaccurate information about customers.

- Incomplete or incorrect analysis. When data resides in separate databases or spreadsheets, it is very difficult to perform enterprise-level analysis and get a 360-degree view of the customer base.

- Poor customer experience. Customers expect the information they provide to be available to all departments in an organization. Yet, according to industry studies, more than 60% of customers still report having to repeat or re-explain information to different company representatives most of the time.

Bottom line: data silos can lead to a lack of visibility, efficiency, and trust, both within the organization and with customers. They also force a repetitive process in which the analyst must access each silo individually, reconcile the information across silos, and only then generate new insights. This process is expensive and must be repeated for every new need.

Possible traditional approaches to the data silo problem

Queries are a common place where this problem shows up. Imagine that an analyst needs information about absenteeism among the company’s employees and wants to relate it to operational failures. The question arises because the company has many breakdowns or accidents in its industrial operations and needs this information as a priority. However, the absence data lives in the human resources silo and the failure data in the operations silo, and this separation stands in the way of answering the question.
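Once both silos can be reached from one place, the question itself is short. As a minimal sketch, the following Python code uses the standard `sqlite3` module to model the two silos as separate databases: the main database stands in for HR and an attached in-memory database named `ops` stands in for Operations. The table names, columns, sample rows, and the choice to relate absences to incidents by site and day are all hypothetical assumptions for illustration.

```python
import sqlite3

# Hypothetical setup: the main database plays the HR silo,
# and an attached in-memory database ("ops") plays the Operations silo.
conn = sqlite3.connect(":memory:")
conn.execute("ATTACH DATABASE ':memory:' AS ops")

# HR silo: one row per employee absence (illustrative schema and data).
conn.execute("CREATE TABLE absences (employee_id INTEGER, site TEXT, day TEXT)")
conn.executemany(
    "INSERT INTO absences VALUES (?, ?, ?)",
    [(1, "Plant A", "2024-03-04"), (2, "Plant A", "2024-03-04"),
     (3, "Plant B", "2024-03-05"), (4, "Plant A", "2024-03-06")],
)

# Operations silo: one row per recorded breakdown or accident (illustrative).
conn.execute("CREATE TABLE ops.incidents (incident_id INTEGER, site TEXT, day TEXT, kind TEXT)")
conn.executemany(
    "INSERT INTO ops.incidents VALUES (?, ?, ?, ?)",
    [(10, "Plant A", "2024-03-04", "breakdown"),
     (11, "Plant B", "2024-03-06", "accident"),
     (12, "Plant A", "2024-03-06", "breakdown")],
)

# Cross-silo question: for each incident, how many absences were recorded
# at the same site on the same day? LEFT JOIN keeps incidents with no absences.
query = """
SELECT i.incident_id, i.site, i.day, i.kind, COUNT(a.employee_id) AS absences
FROM ops.incidents AS i
LEFT JOIN main.absences AS a ON a.site = i.site AND a.day = i.day
GROUP BY i.incident_id, i.site, i.day, i.kind
ORDER BY i.incident_id
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
```

In a real company, the hard part is not this join but everything before it: getting access to both systems, agreeing that «site» and «day» mean the same thing in each, and loading them somewhere they can be queried together.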

Currently, one of the most common solutions to the problem of data silos is the well-known «data lake». Data lakes are large repositories that store data in its native, unprocessed form, whether structured, semi-structured, or unstructured. They hold not only data that is currently in use, but also historical data, which the organization’s data scientists and data analysts can then access and analyze.
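Landing data in a lake is the technically easy part. The following minimal sketch assumes a hypothetical folder layout (one folder per source) and hypothetical file and column names: each silo’s export is written as-is, and structure is applied only when a file is read, an approach often called schema-on-read.

```python
import csv
import json
import tempfile
from pathlib import Path

# Hypothetical lake layout: one folder per source, files landed exactly as exported.
lake = Path(tempfile.mkdtemp()) / "raw"
(lake / "hr").mkdir(parents=True)
(lake / "ops").mkdir(parents=True)

# HR exports CSV; Operations exports JSON Lines. Nothing is transformed on write.
(lake / "hr" / "absences.csv").write_text(
    "emp_no,plant,absence_day\n7,Plant A,2024-03-04\n8,Plant B,2024-03-05\n"
)
(lake / "ops" / "incidents.jsonl").write_text(
    json.dumps({"facility_code": "Plant A", "incident_date": "2024-03-04", "kind": "breakdown"})
    + "\n"
)


def read_raw(path: Path):
    """Schema-on-read: a file is parsed into records only when someone reads it."""
    if path.suffix == ".csv":
        with path.open(newline="") as f:
            return list(csv.DictReader(f))
    if path.suffix == ".jsonl":
        return [json.loads(line) for line in path.read_text().splitlines() if line]
    raise ValueError(f"unsupported format: {path.suffix}")


hr_rows = read_raw(lake / "hr" / "absences.csv")
ops_rows = read_raw(lake / "ops" / "incidents.jsonl")
print(hr_rows[0])
print(ops_rows[0])
```

Note what the lake does not do: after loading, HR still calls the location `plant` while Operations calls it `facility_code`, and the CSV values come back as plain strings. The data now sits in one place, but it has not yet been given a shared meaning.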

Why is a data lake difficult to organize?

Although creating a data lake is technically straightforward, organizing it becomes complex when there are many silos. Suppose a company has 40 silos, that is, 40 different data sources. Giving semantics to each of these sources, so that analysts know what every field means and how it relates to fields in other sources, is very expensive and can take months of work. These are large areas of BI in their own right: data governance (which covers issues such as the privacy and security of sensitive data), semantics, and the data dictionary, all of which are difficult to get right.
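To make «giving semantics» more concrete, here is a minimal sketch of a data dictionary, assuming a hypothetical shared glossary and two hypothetical sources (`hr_absences` and `ops_incidents`). Each source’s local column names are mapped to agreed business terms, and each term carries a governance flag for sensitive data. The structure and every name in it are illustrative, not the format of any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Term:
    """One entry in a shared business glossary (hypothetical structure)."""
    description: str
    sensitive: bool  # governance flag, e.g. personal data needing restricted access


# Shared vocabulary the whole company agrees on (illustrative terms only).
GLOSSARY = {
    "employee_id": Term("Unique identifier of an employee", sensitive=True),
    "site": Term("Plant or facility where the event happened", sensitive=False),
    "event_date": Term("Calendar date of the event, ISO 8601", sensitive=False),
}

# Data dictionary: for each source, map its local column names to glossary terms.
# Every new source needs its own mapping; with 40 sources, that is 40 mappings to agree on.
SOURCE_MAPPINGS = {
    "hr_absences": {"emp_no": "employee_id", "plant": "site", "absence_day": "event_date"},
    "ops_incidents": {"facility_code": "site", "incident_date": "event_date"},
}


def to_shared_terms(source, record):
    """Rename a raw record's columns to glossary terms, refusing unmapped columns."""
    mapping = SOURCE_MAPPINGS[source]
    unmapped = sorted(set(record) - set(mapping))
    if unmapped:
        raise KeyError(f"{source}: no semantics defined for {unmapped}")
    return {mapping[column]: value for column, value in record.items()}


def sensitive_columns(source):
    """List the local columns of a source that map to sensitive glossary terms."""
    return sorted(
        column for column, term in SOURCE_MAPPINGS[source].items() if GLOSSARY[term].sensitive
    )


print(to_shared_terms("hr_absences", {"emp_no": "7", "plant": "Plant A", "absence_day": "2024-03-04"}))
print(to_shared_terms("ops_incidents", {"facility_code": "Plant A", "incident_date": "2024-03-04"}))
print(sensitive_columns("hr_absences"))
```

Once both sources use the same vocabulary, HR’s `plant` and Operations’ `facility_code` both become `site`, so the two can be related. Here, records with columns nobody has defined are rejected rather than silently passed through; whether to reject or merely flag them is itself a governance decision.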

The advantage of a data lake is that it can be built once and then reused. The disadvantage is that building it is often a long and expensive process when multiple layers of information are involved. Imagine, for example, that a company has the following layers of access to information:

- RealTime/Control

- Application

- Integration

- Analytics

In this setup, the analytics layer is where the analyst works, looking for the relationship between HR and Operations. In this layer, time must be invested to build the cross-section of information, build the query, and build a dashboard. To do this, the analyst has to ask the BI department to cross-reference HR data with operations data to relate absenteeism to operational failures, and the BI department then has to deliver the solution. This usually means a software development process, which is also expensive.

Final thoughts

Many companies today have charts and dashboards that were built to be used only once, for questions that are never asked again. If the question about absenteeism comes up because of frequent operational failures, and it takes us three months to build the reports that answer it, the question may no longer be relevant by the time the answer arrives. If we do not answer these questions just in time, we are too late, and we have also spent significant company resources and money along the way.

Can Generative Artificial Intelligence (GenAI) provide new solutions to this common problem for companies in any industry? In a future article we will answer this question. Stay tuned to our newsletter.